In the first part, we looked at the conceptual implications of the various styles of object composition. Now we shall look at a few common implementations of object composition in conjunction with the concepts presented in the earlier post.

Association v/s composition

First off, we shall start with an example in C to understand the difference:

struct node { int data; struct node *next; };

This is a fairly common definition of a node in a singly linked list of integers. Each node contains two elements: an integer and a pointer (logical reference) to the next node. The object layout looks as follows:

It is interesting to note that this logical layout would still be correct in most programming language implementations that have managed memory. Things get interesting when we go back to the definition of a rectangle as seen in the first post.

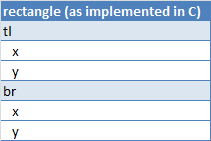

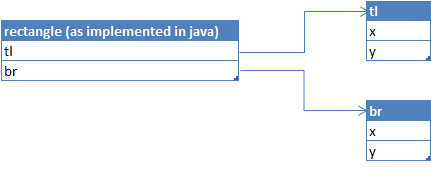

The difference should be fairly obvious: the rectangle in C contains two points, whereas the rectangle in Java contains references to two free-standing point objects. Again, note that the layout shown for Java actually holds true for any system where memory management is taken care of by some runtime. A non-exhaustive list includes Perl, PHP, Python, and shared_ptr in Boost.
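To make the two layouts concrete, here is a minimal sketch of both styles in C. The names rect_inline, rect_indirect and lies_within are made up for illustration and do not come from the first post; the helper simply checks whether one object sits inside the memory occupied by another.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

struct point { int x; int y; };

/* Inline composition: both points are stored inside the rectangle. */
struct rect_inline {
    struct point tl;   /* top-left */
    struct point br;   /* bottom-right */
};

/* Indirection: the rectangle stores only references to free-standing points. */
struct rect_indirect {
    struct point *tl;
    struct point *br;
};

/* Hypothetical helper: returns 1 if the psize bytes at p fall entirely
 * inside [base, base + size), 0 otherwise. */
static int lies_within(const void *base, size_t size, const void *p, size_t psize)
{
    uintptr_t b = (uintptr_t)base;
    uintptr_t q = (uintptr_t)p;
    assert(base != NULL && p != NULL);
    return q >= b && q + psize <= b + size;
}
```

For a struct rect_inline a, the check lies_within(&a, sizeof a, &a.tl, sizeof a.tl) holds. For a struct rect_indirect b whose tl points at a separately declared point, the same check on b.tl fails, because the point lives somewhere else entirely.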

Implications of indirection

Additional usage of memory

The most obvious impact is that the rectangle class, as implemented in the memory-managed style, now uses two additional pointers to store references. This is the user-visible overhead. There could also be a user-invisible overhead in managing additional objects on the heap. To get an idea of what such overheads could be, one can look at the implementation of heap allocation described in “The C Programming Language” by Kernighan and Ritchie (K&R). Note that the overheads can be amortized by some very clever implementations or subsidized elsewhere, but they do exist in some form or the other. The deeper the composition (i.e. the object/struct tree), the more overhead we pay, both visible and invisible.
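The visible part of the overhead can be measured with sizeof. The sketch below uses illustrative names (inline_footprint, indirect_footprint, rect_indirect_new, rect_indirect_free) that are assumptions for this example only. It counts just the bytes the program asks for; whatever bookkeeping the allocator adds per block is the invisible part and is not shown.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

struct point { int x; int y; };
struct rect_inline   { struct point tl, br; };
struct rect_indirect { struct point *tl, *br; };

/* Bytes requested by the program for one rectangle
 * (allocator bookkeeping excluded). */
static size_t inline_footprint(void)
{
    return sizeof(struct rect_inline);
}

static size_t indirect_footprint(void)
{
    return sizeof(struct rect_indirect) + 2 * sizeof(struct point);
}

/* Builds an indirect rectangle: three heap allocations where the inline
 * version needs one. Returns NULL if any allocation fails. */
static struct rect_indirect *rect_indirect_new(int x1, int y1, int x2, int y2)
{
    struct rect_indirect *r = malloc(sizeof *r);
    if (r == NULL)
        return NULL;
    r->tl = malloc(sizeof *r->tl);
    r->br = malloc(sizeof *r->br);
    if (r->tl == NULL || r->br == NULL) {
        free(r->tl);
        free(r->br);
        free(r);
        return NULL;
    }
    r->tl->x = x1; r->tl->y = y1;
    r->br->x = x2; r->br->y = y2;
    return r;
}

static void rect_indirect_free(struct rect_indirect *r)
{
    if (r == NULL)
        return;
    free(r->tl);
    free(r->br);
    free(r);
}
```

The difference between the two footprints is the size of the two stored pointers, and every extra level of nesting adds another set of pointers and another round of allocations.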

Slower access of fields

This is a far more worrisome aspect than a larger footprint. Loading the rectangle object into memory does not mean that the referenced objects tl and br are also loaded into the same part of memory. Here, the term memory is used in a generic sense, as opposed to RAM, to signify any class of memory. For example, it is possible that the rectangle object is sitting in the L1 data cache of the CPU whereas tl is actually sitting on disk, since the page containing that object has been swapped out of RAM! In addition, an access to the x coordinate of the logical top-left corner (tl) goes as follows in case of indirection:

- Load contents of base address of rectangle + offset to tl into say “r”

- Load contents of base address of point (r) + offset to x

In case of inline composition, it would simply be “Load contents of base address of rectangle + (offset to tl + offset to x)”.

Again, this overhead of jumping across various memory addresses due to a lack of locality of reference is proportional to the complexity of the object composition.
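The two access paths described above can be spelled out in C with offsetof. This is only a sketch of what a compiler does for rect->tl.x and rect->tl->x; the function names inline_tl_x and indirect_tl_x are made up for illustration, and memcpy is used merely to make each load explicit.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

struct point { int x; int y; };
struct rect_inline   { struct point tl, br; };
struct rect_indirect { struct point *tl, *br; };

/* Inline: a single load at a compile-time constant offset from the base. */
static int inline_tl_x(const struct rect_inline *rect)
{
    const char *base = (const char *)rect;
    int x;
    assert(rect != NULL);
    memcpy(&x,
           base + offsetof(struct rect_inline, tl) + offsetof(struct point, x),
           sizeof x);
    return x;
}

/* Indirect: load the reference first, then load x through it. */
static int indirect_tl_x(const struct rect_indirect *rect)
{
    const char *base = (const char *)rect;
    struct point *r;   /* the intermediate "r" from the steps above */
    int x;
    assert(rect != NULL);
    memcpy(&r, base + offsetof(struct rect_indirect, tl), sizeof r);   /* load 1 */
    memcpy(&x, (const char *)r + offsetof(struct point, x), sizeof x); /* load 2 */
    return x;
}
```

The second load in indirect_tl_x depends on the result of the first, and its target address can be anywhere in memory, which is where the loss of locality comes from.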

Object copying/serialization

Another common problem with the indirection scheme is that it introduces the notion of shallow copy v/s deep copy. Most common implementations of object copying tend to do shallow copies. Explicit effort is usually needed to provide deep-copy semantics. In case you have been reading this article as a C v/s Java thing, now is a good time to wake up. C++ programmers, for example, have always had to deal with the “how deeply should we copy” problem whenever they chose to use an indirection scheme. In fact, the same is true even of C programmers, except that they never had the option to overload the assignment operator and copy constructor, and hence it always had to be controlled using documentation and special functions.
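In C, the “special functions” could look something like the sketch below. The names rect_shallow_copy, rect_deep_copy and rect_deep_free are hypothetical; plain struct assignment gives the shallow copy for free, while the deep copy has to be written by hand.

```c
#include <assert.h>
#include <stdlib.h>

struct point { int x; int y; };
struct rect  { struct point *tl; struct point *br; };

/* Shallow copy: struct assignment copies the two pointers, so the copy
 * shares its points with the original. */
static struct rect rect_shallow_copy(const struct rect *src)
{
    assert(src != NULL);
    return *src;
}

/* Deep copy: allocates fresh points and copies their contents.
 * Returns 0 on success, -1 if an allocation fails. */
static int rect_deep_copy(struct rect *dst, const struct rect *src)
{
    struct point *tl, *br;
    assert(dst != NULL && src != NULL);
    tl = malloc(sizeof *tl);
    br = malloc(sizeof *br);
    if (tl == NULL || br == NULL) {
        free(tl);
        free(br);
        return -1;
    }
    *tl = *src->tl;
    *br = *src->br;
    dst->tl = tl;
    dst->br = br;
    return 0;
}

/* Releases the points owned by a deep copy. */
static void rect_deep_free(struct rect *r)
{
    assert(r != NULL);
    free(r->tl);
    free(r->br);
    r->tl = NULL;
    r->br = NULL;
}
```

After a shallow copy, a write through the original's tl is visible through the copy as well; after a deep copy it is not. Which of the two a caller gets is exactly what the documentation has to spell out.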

A variant of object copying is serialization. Serializing an object with indirections is usually a recursive descent problem, given the non-contiguous memory layout. Fully inlined objects, on the other hand, can be serialized in one logical instruction that transfers a block of memory from one location to another.
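A rough sketch of the difference, using made-up function names (serialize_inline, serialize_point, serialize_indirect) and assuming the reader of the bytes runs on the same architecture with the same compiler, since a raw memory image carries the writer's byte order and padding with it:

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

struct point { int x; int y; };
struct rect_inline   { struct point tl, br; };
struct rect_indirect { struct point *tl, *br; };

/* Inline: the whole object is one contiguous block, so a single block
 * transfer is enough. Returns the number of bytes written. */
static size_t serialize_inline(const struct rect_inline *r, unsigned char *out)
{
    assert(r != NULL && out != NULL);
    memcpy(out, r, sizeof *r);
    return sizeof *r;
}

static size_t serialize_point(const struct point *p, unsigned char *out)
{
    assert(p != NULL && out != NULL);
    memcpy(out, p, sizeof *p);
    return sizeof *p;
}

/* Indirect: the stored pointers mean nothing to the reader, so each
 * reference has to be followed and its target written out in turn. */
static size_t serialize_indirect(const struct rect_indirect *r, unsigned char *out)
{
    size_t n = 0;
    assert(r != NULL && out != NULL);
    n += serialize_point(r->tl, out + n);
    n += serialize_point(r->br, out + n);
    return n;
}
```

With only one level of nesting the descent is just two calls, but every additional level of indirection adds another layer of such functions, whereas the inline version stays a single memcpy.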

No memory sharing

Interprocess shared memory is a powerful concept in latency-sensitive applications. Multiple processes can get a consistent view (including immediate write visibility) without the overheads of IPC. The presence of pointers/references, however, makes it nearly impossible to share such objects across processes without implementing a userspace-level virtual address manager. This is because it is usually infeasible to map a given object at the same virtual address in multiple processes. Failure to do so means the references/pointers are no longer valid.
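The failure mode can be imitated inside a single process. In the sketch below, the struct region and the functions region_init and resolve are hypothetical, and copying the block to a second address stands in for a second process mapping the same segment somewhere else. The offset field illustrates one very simple form a userspace address scheme could take: references stored relative to the base of the region. This is an assumption made for illustration, not something prescribed above.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

struct point { int x; int y; };

/* One block of memory holding a rectangle's points, standing in for a
 * shared memory segment. It carries both kinds of reference to tl. */
struct region {
    struct point *tl_ptr;   /* absolute address: valid only where it was built */
    size_t        tl_off;   /* byte offset from the region base: relocatable */
    struct point  tl;
    struct point  br;
};

static void region_init(struct region *rg, int x1, int y1, int x2, int y2)
{
    assert(rg != NULL);
    rg->tl.x = x1; rg->tl.y = y1;
    rg->br.x = x2; rg->br.y = y2;
    rg->tl_ptr = &rg->tl;
    rg->tl_off = offsetof(struct region, tl);
}

/* Turns an offset back into a pointer using the caller's own base address. */
static struct point *resolve(void *base, size_t off)
{
    assert(base != NULL);
    return (struct point *)((char *)base + off);
}
```

If a region a is initialised and then copied byte for byte into a second region b, b.tl_ptr still points into a, whereas resolve(&b, b.tl_off) correctly yields &b.tl. Every dereference now pays for an extra addition, which is the price of making the references position independent.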

So why go through all of this?

The most common reason these days for choosing indirection over inlining is that most programming languages no longer offer the choice, and the reason for that is that automatic memory management becomes easier once we assume each sub-object has a life of its own. Should you happen to have a choice, then the question is whether the sub-object is really a part of the main object or just something that the main object collaborates with. In case of a collaboration, again we have to resort to indirection. That being said, there clearly are situations where it does help to have inlined objects.